MIT CSAIL

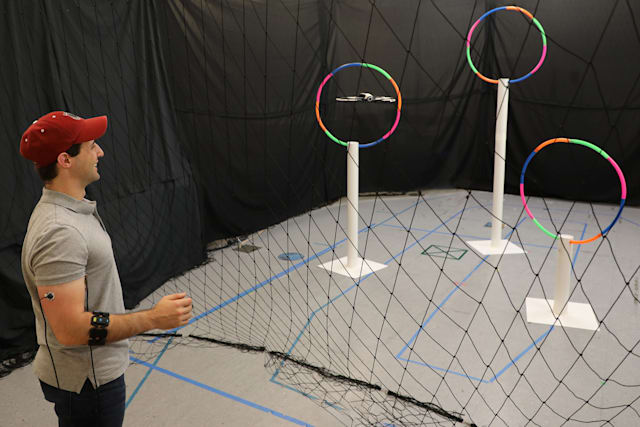

There might be a more intuitive way to control robots and drones than waggling joysticks or tapping at a screen. MIT CSAIL researchers have developed a control method, Conduct-A-Bot, that uses muscle sensors and motion detection for more ‘natural’ robot control. Algorithms detect gestures using both your movement and the muscle activity in your biceps, forearms and triceps. You can wave your hand, clench your fist or even tense your arm to steer the bot.

The system doesn’t need environmental cues, offline calibration or per-person training. In other words, you could just start using it.

CSAIL’s tech isn’t ready for real-world use yet. A Parrot Bebop 2 drone responded to 82 percent of over 1,500 gestures: promising, but not something you’d depend on in a critical situation. The scientists intend to refine the technology, though, including support for custom or more continuous gestures. Ideally, the system would also learn from the commands to better understand input, or even learn to navigate on its own.

If the technology does escape the lab, it could make robot control more accessible to people who’d otherwise be intimidated. It could also be helpful for remote exploration, personal robots and other tasks where you may want the more organic control of a human in tricky situations.
